Connecting an AI agent to your backend is like handing the car keys to a genius who knows every possible route but has never passed a driving test. Without strict “traffic rules,” you’re not just risking a detour; you’re inviting full-blown chaos.

Today, more and more businesses are experimenting with plugging LLMs directly into backend systems. The reasons are clear: natural language interfaces make apps friendlier, and AI agents can automate processes in ways that traditional APIs never could. But this new power brings a new risk: unstructured, unpredictable output crashing into structured databases.

This article comes from our real-world work at DigitalBKK, where we’ve been building and testing AI-driven backends for SMEs and enterprises across Thailand and beyond. Here’s the proven approach we use to keep AI powerful, but never reckless.

1. Contracts Must Be Clear, Not Just “Send JSON”

Simply telling an LLM to “return JSON” is like asking a chef to “make food.” You could get anything from fine dining to burnt toast.

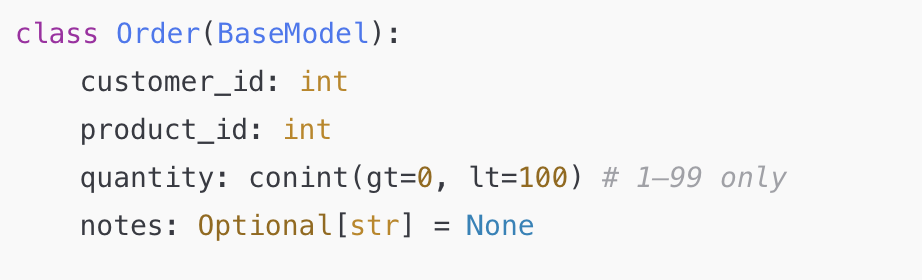

We define strict Pydantic models in FastAPI, which publishes them as JSON Schema in the generated OpenAPI specification.

This isn’t a suggestion; it’s a hard rule. Every AI response is validated against the relevant schema. If it doesn’t fit, it’s rejected before it ever touches the core system.
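As an illustration, here is a minimal sketch of such a contract, assuming Pydantic v2 and a hypothetical order-creation payload. The model and field names (`CreateOrderRequest`, `OrderItem`, `customer_id`, `sku`, `quantity`) and the helper `parse_agent_output` are invented for the example, not taken from a real system. `extra="forbid"` turns unknown keys into validation errors, so an agent cannot slip in fields the contract doesn’t define.

```python
from typing import List, Literal, Optional

from pydantic import BaseModel, ConfigDict, Field, ValidationError


class OrderItem(BaseModel):
    # Reject any keys the contract does not define.
    model_config = ConfigDict(extra="forbid")

    sku: str = Field(min_length=1)
    quantity: int = Field(gt=0)  # zero or negative quantities are rejected


class CreateOrderRequest(BaseModel):
    model_config = ConfigDict(extra="forbid")

    action: Literal["create"]  # only this exact value is accepted
    customer_id: str = Field(min_length=1)
    items: List[OrderItem] = Field(min_length=1)  # at least one line item


def parse_agent_output(raw: str) -> Optional[CreateOrderRequest]:
    """Validate raw LLM output against the contract; return None if it doesn't fit."""
    try:
        # Parses and validates in one step; in Pydantic v2, malformed JSON
        # also raises ValidationError.
        return CreateOrderRequest.model_validate_json(raw)
    except ValidationError:
        return None
```

Declaring the same model as the body parameter of a FastAPI endpoint (for example `def create_order(req: CreateOrderRequest)` under `@app.post("/orders/create")`) is what makes it appear in the generated OpenAPI schema.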

2. Use Repair Strategies, Not Blind Retries

When LLMs send slightly broken JSON (like extra commas or missing quotes), many teams retry the prompt. That wastes tokens and time.

Instead, we implement repair strategies with lightweight middleware that auto-corrects common issues:

Removing trailing commas

Wrapping naked keys in quotes

Merging fragmented arrays

This way, “almost correct” data becomes usable without looping endlessly.
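A minimal sketch of such middleware in plain Python, assuming simple regex-based fixes for the first two issues in the list. `repair_json` is a hypothetical helper name, and merging fragmented arrays is left out because what counts as a “fragment” depends on your payloads. These regexes don’t understand JSON string contents, so a string value that happens to contain something like `, name:` could be altered; a production version would want a tokenizer-aware approach.

```python
import json
import re
from typing import Any

# ",]" or ",}" (optionally with whitespace between): drop the comma.
_TRAILING_COMMA = re.compile(r",\s*([}\]])")
# A bare identifier used as an object key: {action: ...} -> {"action": ...}.
_UNQUOTED_KEY = re.compile(r"([{,]\s*)([A-Za-z_][A-Za-z0-9_]*)(\s*:)")


def repair_json(raw: str) -> Any:
    """Parse raw LLM output, applying cheap syntactic fixes only if needed.

    Raises json.JSONDecodeError if the text is still invalid after repair,
    so the caller can reject it instead of retrying in a loop.
    """
    try:
        return json.loads(raw)  # fast path: already valid JSON
    except json.JSONDecodeError:
        pass

    fixed = _TRAILING_COMMA.sub(r"\1", raw)
    fixed = _UNQUOTED_KEY.sub(r'\1"\2"\3', fixed)
    return json.loads(fixed)  # exactly one repair attempt, no loop
```

For example, `repair_json('{action: "create", "items": [1, 2,],}')` yields `{'action': 'create', 'items': [1, 2]}`. Repair only fixes syntax, so the result should still be validated against the schema before it is used.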

3. Treat Function Calls as Real APIs

Never let an LLM invent operations. Every function call should map directly to an actual, documented backend endpoint, such as:

/orders/create

/orders/cancel

/orders/status

If an agent tries something like /orders/reverse_time, our backend validation immediately rejects it. That’s how you keep both security and logic intact.
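One way to enforce this is an explicit allowlist that maps each permitted operation to its handler, sketched below in plain Python. The handler functions, `ALLOWED_OPERATIONS`, `dispatch`, and `UnknownOperationError` are hypothetical stand-ins for real backend code.

```python
from typing import Any, Callable, Dict

Handler = Callable[[Dict[str, Any]], Dict[str, Any]]


class UnknownOperationError(Exception):
    """Raised when the agent requests an operation that isn't on the allowlist."""


# Hypothetical stub handlers standing in for real backend logic.
def create_order(args: Dict[str, Any]) -> Dict[str, Any]:
    return {"status": "created", "customer_id": args["customer_id"]}


def cancel_order(args: Dict[str, Any]) -> Dict[str, Any]:
    return {"status": "cancelled", "order_id": args["order_id"]}


def order_status(args: Dict[str, Any]) -> Dict[str, Any]:
    return {"status": "pending", "order_id": args["order_id"]}


# The only operations an agent may call; anything else is rejected.
ALLOWED_OPERATIONS: Dict[str, Handler] = {
    "/orders/create": create_order,
    "/orders/cancel": cancel_order,
    "/orders/status": order_status,
}


def dispatch(operation: str, args: Dict[str, Any]) -> Dict[str, Any]:
    handler = ALLOWED_OPERATIONS.get(operation)
    if handler is None:
        # No guessing, no dynamic lookup: unknown names fail loudly.
        raise UnknownOperationError(f"operation not allowed: {operation!r}")
    return handler(args)
```

Calling `dispatch("/orders/reverse_time", {})` raises `UnknownOperationError`. In a real service, each handler would also validate `args` against its own strict contract, as described in the first rule.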

4. Store Failures Like Gold

Invalid or malformed responses aren’t garbage; they’re golden feedback data. We capture them in a Dead Letter Queue with the original raw output intact.

This allows us to:

Identify recurring failure patterns

Improve schemas or repair strategies

Refine prompts and contracts for tighter control

Over time, this creates a feedback loop that makes the system smarter and more resilient.
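A minimal in-memory sketch of that capture-and-analyze loop, assuming only the Python standard library. `DeadLetterQueue`, `FailedResponse`, `handle_agent_output`, and the `error_type` labels are hypothetical names; a production setup would more likely write to a durable queue or table. The essentials are the same either way: keep the raw output untouched, record why it failed, and count failure categories to spot recurring patterns.

```python
import json
from collections import Counter
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Any, List, Optional, Tuple


@dataclass
class FailedResponse:
    raw: str          # original LLM output, stored exactly as received
    error_type: str   # short category used for pattern analysis
    detail: str       # the parser/validator error message
    received_at: str  # UTC timestamp (ISO 8601)


@dataclass
class DeadLetterQueue:
    items: List[FailedResponse] = field(default_factory=list)

    def push(self, raw: str, error_type: str, detail: str) -> None:
        now = datetime.now(timezone.utc).isoformat()
        self.items.append(FailedResponse(raw, error_type, detail, now))

    def top_failures(self, n: int = 3) -> List[Tuple[str, int]]:
        """Most frequent failure categories, most common first."""
        return Counter(item.error_type for item in self.items).most_common(n)


def handle_agent_output(raw: str, dlq: DeadLetterQueue) -> Optional[Any]:
    try:
        return json.loads(raw)
    except json.JSONDecodeError as exc:
        # Keep the failure for later analysis instead of discarding it.
        dlq.push(raw, "json_decode", str(exc))
        return None
```

Schema failures can be pushed the same way under their own `error_type`, so `top_failures()` shows which kind of mistake to address first in prompts, schemas, or repair rules.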

Conclusion

AI agents talking directly to your database is one of the most exciting shifts in backend development. But success doesn’t come from hoping the AI will “get it right.” It comes from enforcing structure at every touchpoint.

Define strict contracts

Apply repair strategies instead of retries

Treat function calls as secure APIs

Learn from every failure

Do this, and you’ll turn unpredictable LLMs into reliable backend partners that extend your systems safely and powerfully.

Thinking about connecting AI agents to your business backend but not sure where to start?

Let’s talk:

LINE ID: @digitalbkk

Live chat: digitalbkk.com

Email: info@digitalbkk.com